Upload a Dataset

In the Sampling section, you are given a set of simulations to run with your conventional simulator. Once completed, these simulations are uploaded in the Datasets section.

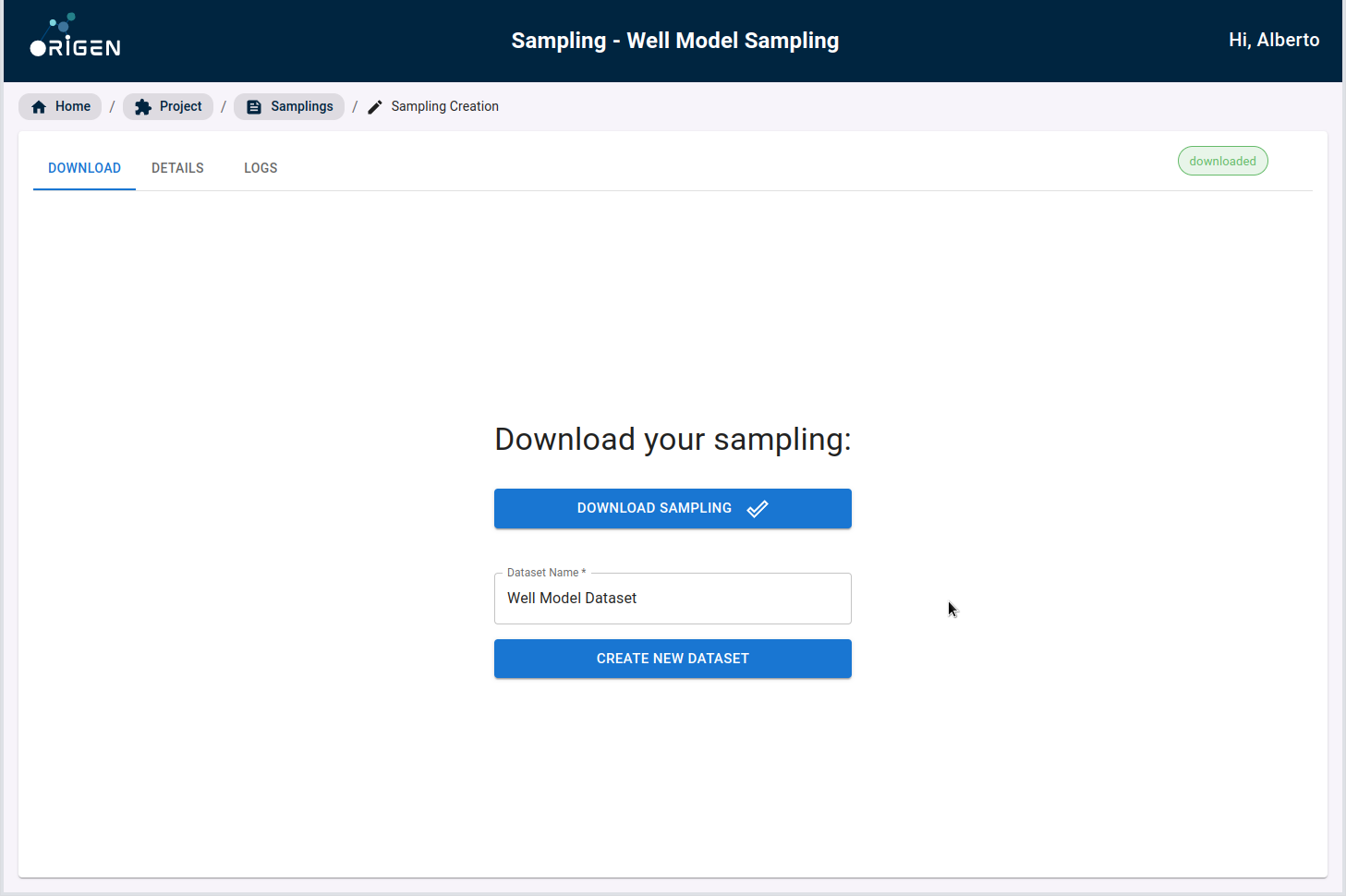

To create a dataset, select a finished sampling and click the "Create new dataset" button.

Note: You need to click the "Download sampling" button to activate the "Create new dataset" button.

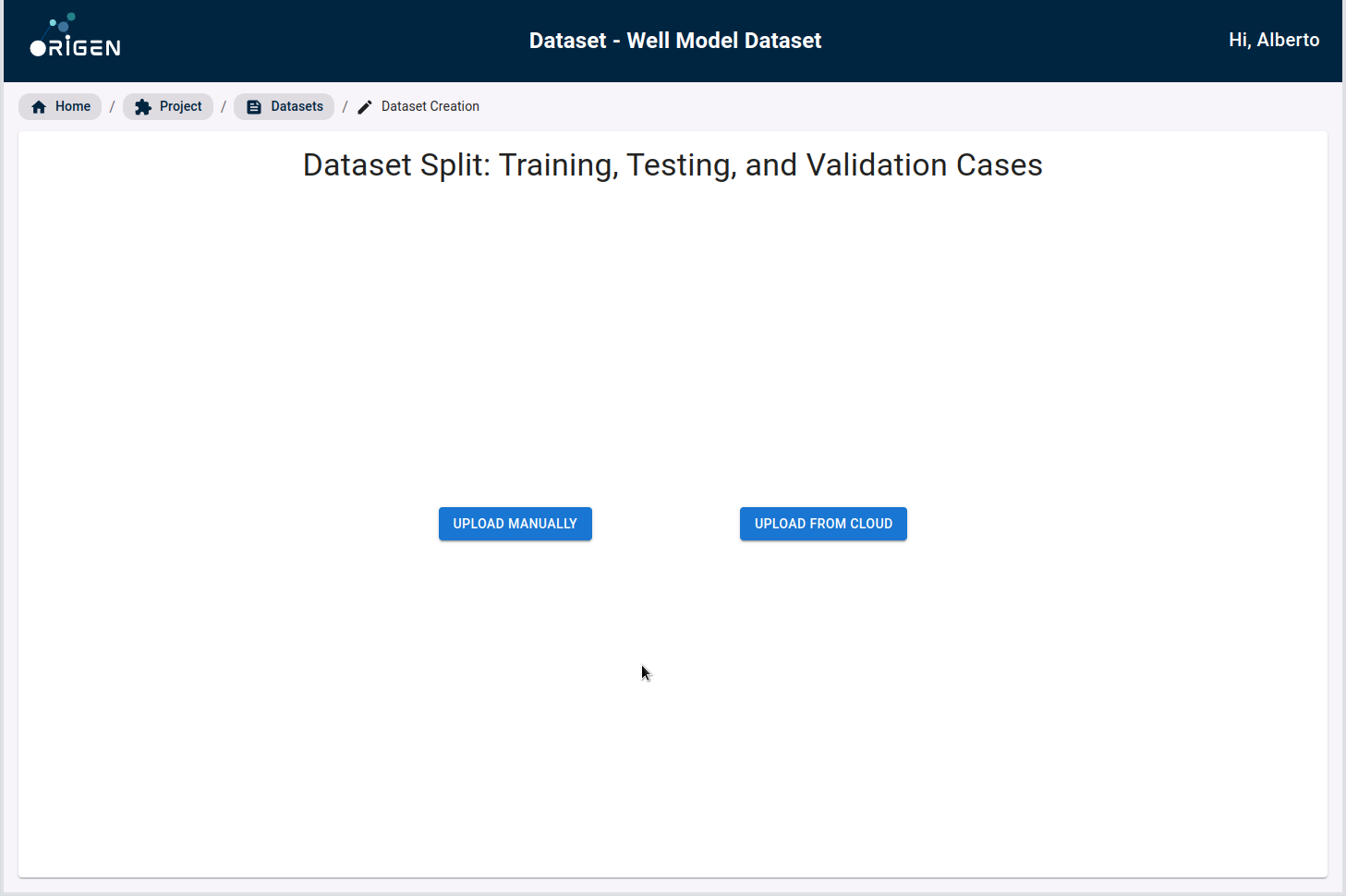

Once you click "Create new dataset", you will be taken directly to the view of the newly created dataset. From there, you can choose to upload your dataset either from your Azure Blob Storage or from your computer's filesystem.

Uploading a dataset directly from cloud

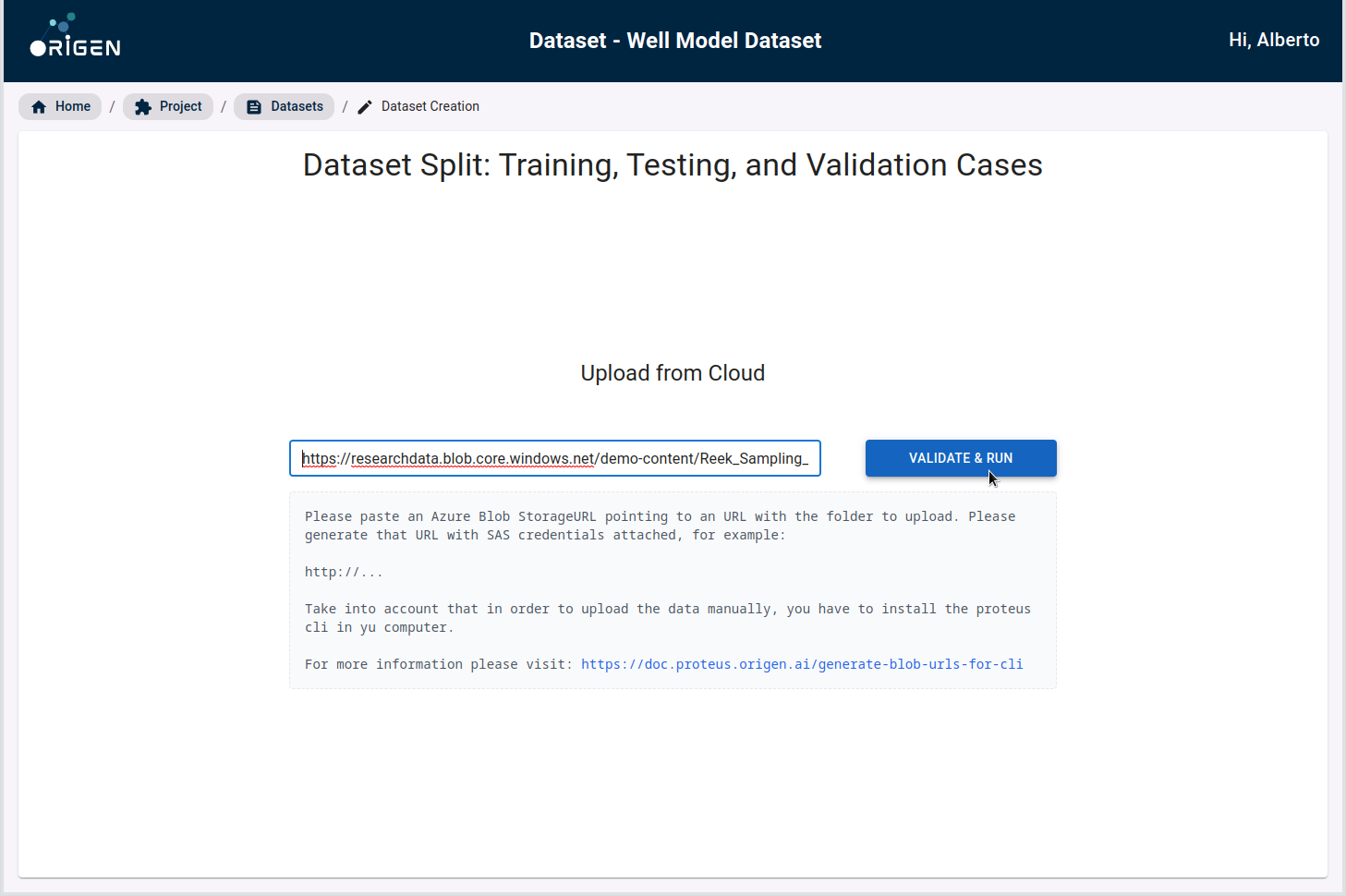

Click the "Upload from Cloud" button. You will be presented with a view where you can paste a Blob Storage SAS URL referencing your dataset.

Blob storage URL with Sample Reek Data

https://researchdata.blob.core.windows.net/demo-content/Reek_Sampling_small/?sv=2021-10-04&st=2023-06-23T08%3A50%3A53Z&se=2026-06-24T08%3A50%3A00Z&sr=c&sp=rl&sig=%2BDpWg%2BxyuEsFBnuhybKbPu52SA3b%2BLliuJ3waEKE2sc%3D

Paste your Blob Storage SAS URL in the input box and click "Validate & Run".
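Before pasting a SAS URL, it can help to check its permissions and expiry, since an expired token is a common reason for a failed import. The sketch below reads the standard SAS query fields `sp` (permissions) and `se` (expiry time). The helper names `sas_param`, `sas_time` and `sas_expired` are hypothetical and not part of Proteus, and the URL is a made-up example with a placeholder signature.

```shell
# Hypothetical helpers (not part of proteus-cli) for inspecting a SAS URL.

# Print the raw value of one query parameter. $1 = SAS URL, $2 = parameter name.
sas_param() {
  printf '%s\n' "${1#*[?]}" | tr '&' '\n' | sed -n "s/^$2=//p" | head -n 1
}

# Same as sas_param, but decode the percent-encoded colons found in SAS timestamps.
sas_time() {
  sas_param "$1" "$2" | sed 's/%3[Aa]/:/g'
}

# Succeed if the expiry ($1) is not later than the current time ($2).
# Both must be UTC timestamps of the form YYYY-MM-DDTHH:MM:SSZ, which sort as text.
sas_expired() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | LC_ALL=C sort | head -n 1)" = "$1" ]
}

# Made-up URL for illustration; the signature is a placeholder.
url='https://example.blob.core.windows.net/container/folder/?sv=2021-10-04&se=2026-06-24T08%3A50%3A00Z&sr=c&sp=rl&sig=PLACEHOLDER'

echo "permissions: $(sas_param "$url" sp)"
echo "expires:     $(sas_time "$url" se)"
if sas_expired "$(sas_time "$url" se)" "$(date -u +%Y-%m-%dT%H:%M:%SZ)"; then
  echo "token has expired; request a new SAS URL"
fi
```

In the sample URL above, `sp=rl` grants read and list permissions and `sr=c` scopes the token to a container.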

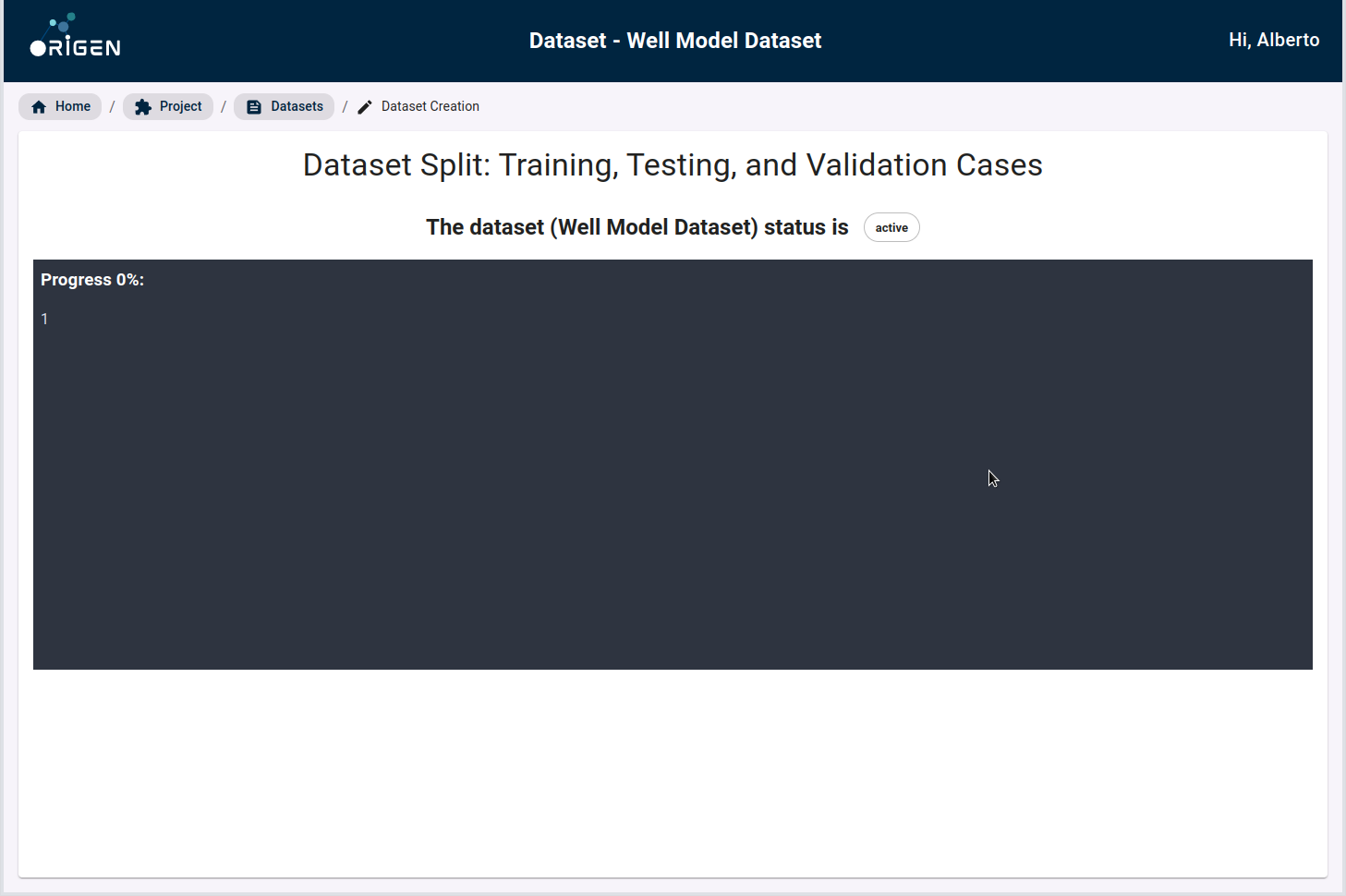

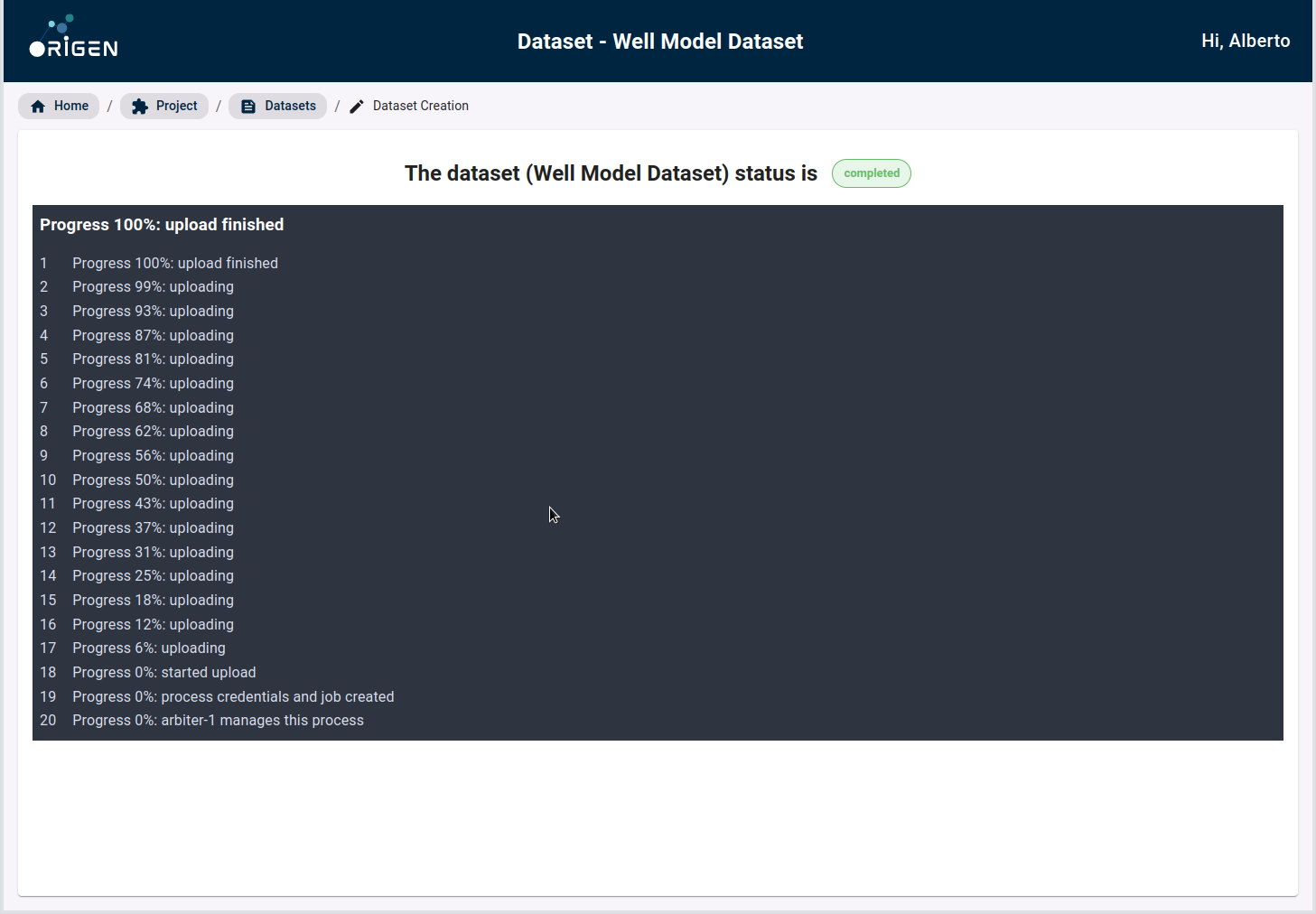

After a few minutes, your dataset upload will be complete.

Uploading a dataset from your computer

Click the "Upload Manually" button. You will be presented with a view where you can generate the credentials and the command needed to run the dataset import process from your own computer.

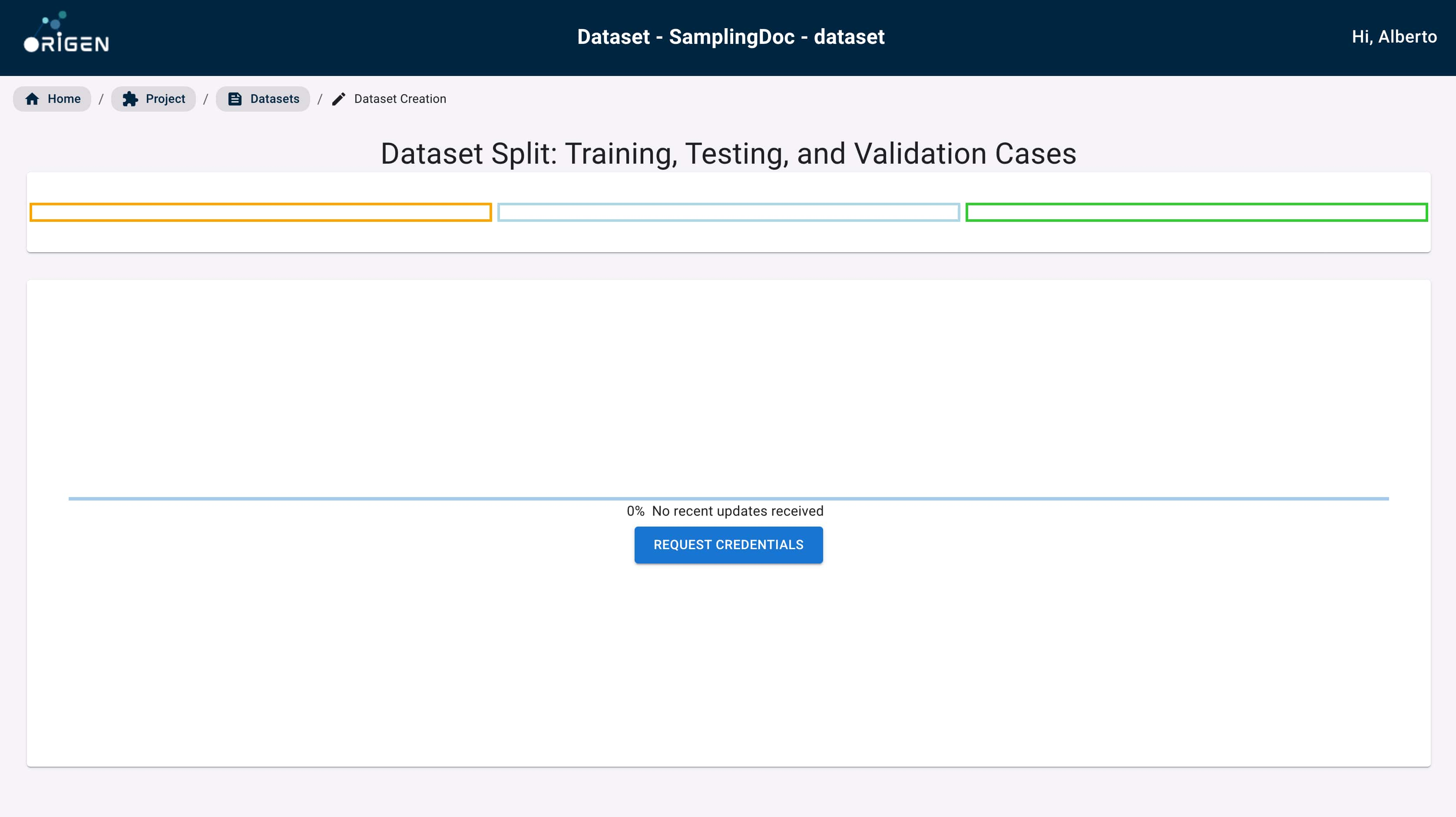

After that, you will be presented with the upload dataset progress view. In Proteus, data must be uploaded using the Proteus CLI.

Click on the "Request credentials" button to generate a set of credentials to be used in the command prompt.

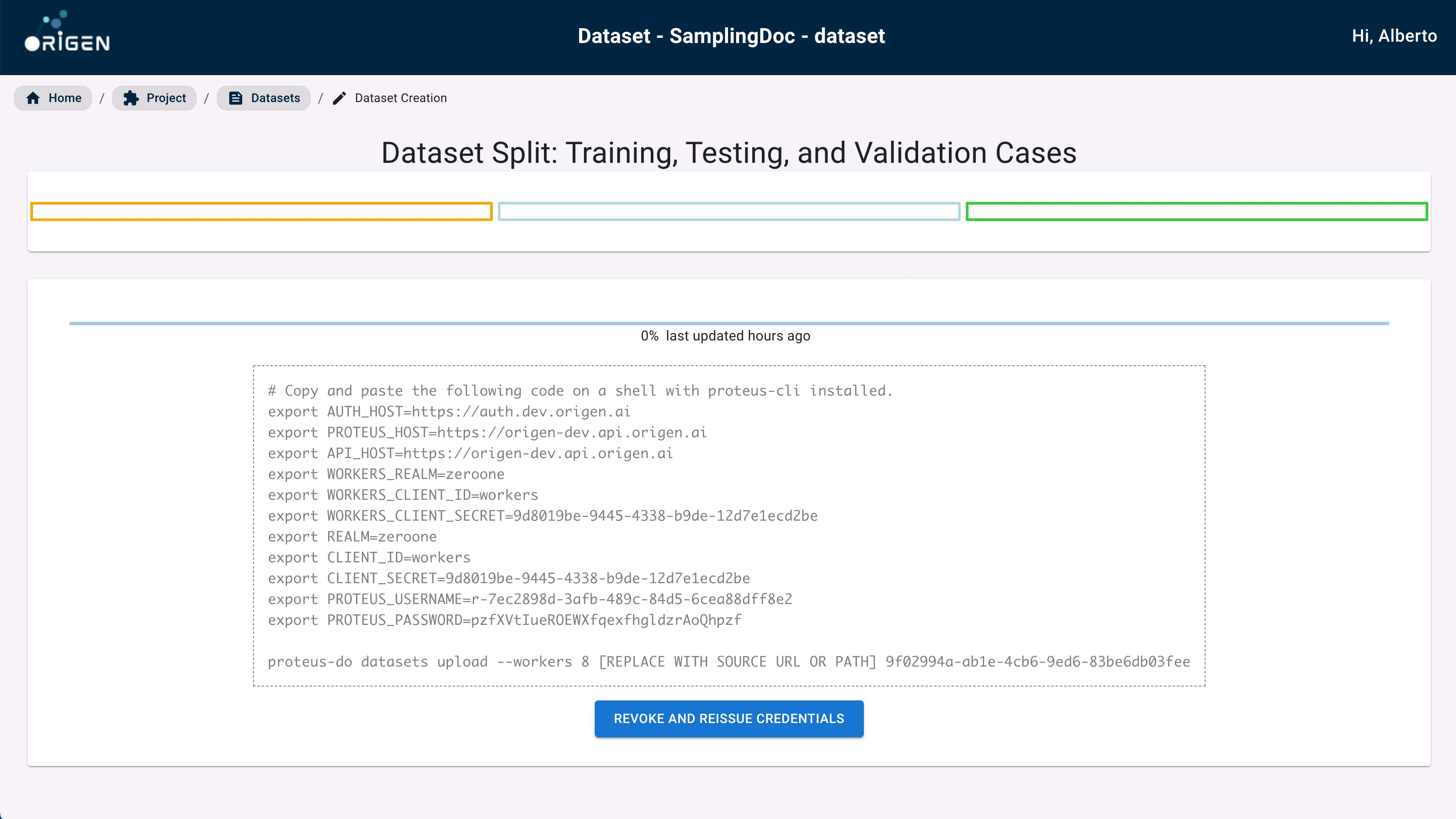

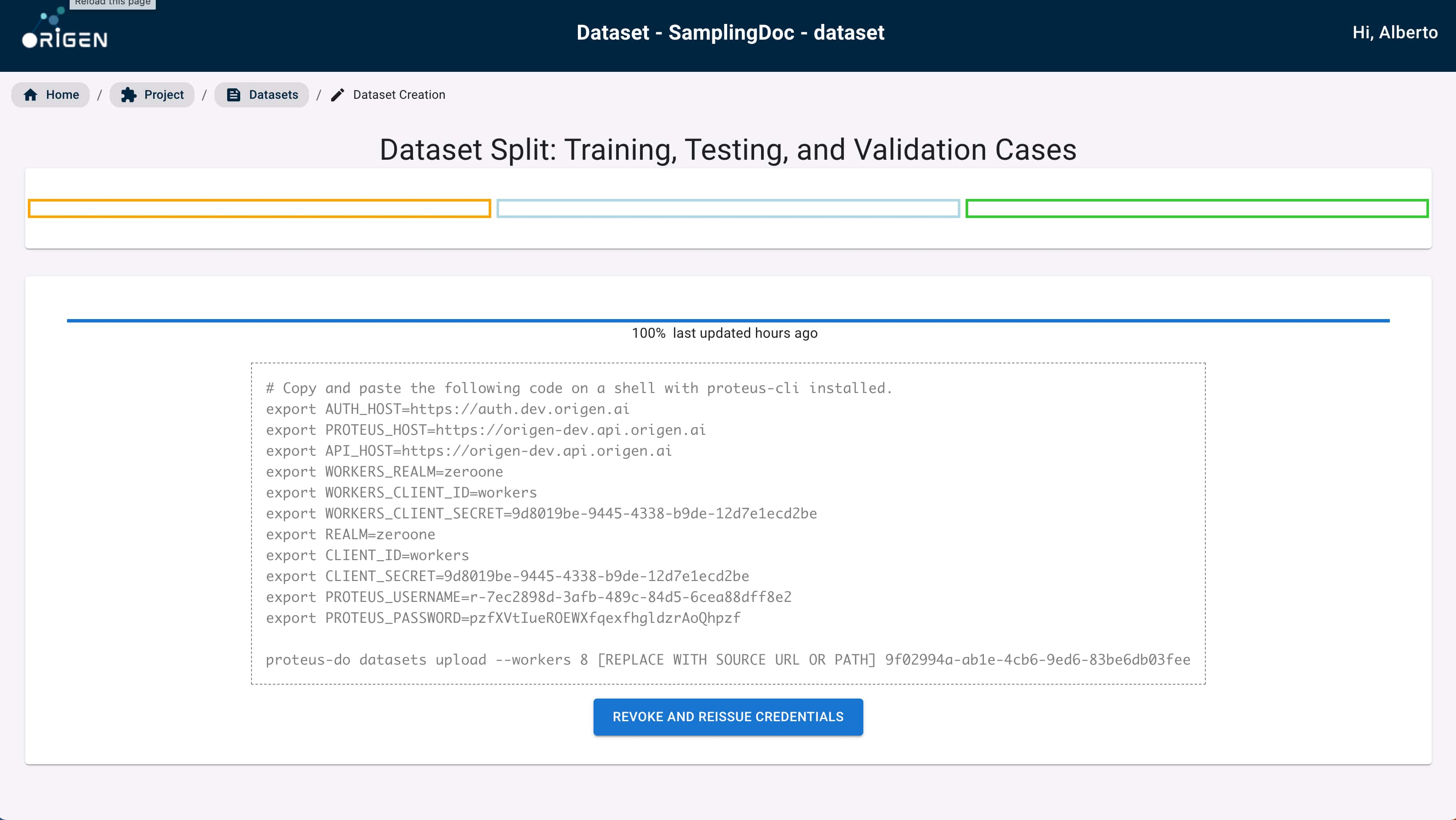

After a few seconds, you should be presented with a list of environment variable definitions and a partially constructed command that pre-processes your data and uploads it to the dataset currently being displayed.

Replace [REPLACE WITH SOURCE URL OR PATH] with either a path to a folder on your local system or a reference to an Azure Blob Storage folder, and paste the command into your terminal.
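If the source is a URL that carries a SAS token, it contains `?` and `&`, which the shell would otherwise interpret, so the value should be quoted. The substitution could be sketched as follows. The function name `fill_source` is hypothetical, and the template string is only an assumed shape of the generated command (with `DATASET_ID` standing in for the real identifier); copy the actual command from your browser.

```shell
# Hypothetical helper (not part of proteus-cli): fill the placeholder in the
# command copied from the browser and wrap the source in single quotes.
# $1 = command template, $2 = source folder or URL (must not contain a single quote).
fill_source() {
  placeholder='[REPLACE WITH SOURCE URL OR PATH]'
  case "$1" in
    *"$placeholder"*) ;;
    *) echo "placeholder not found in command" >&2; return 1 ;;
  esac
  prefix="${1%%"$placeholder"*}"   # text before the placeholder
  suffix="${1#*"$placeholder"}"    # text after the placeholder
  printf "%s'%s'%s\n" "$prefix" "$2" "$suffix"
}

# Assumed shape of the generated command; the real one comes from the browser.
template='proteus-do datasets upload --replace --workers 8 [REPLACE WITH SOURCE URL OR PATH] DATASET_ID'
fill_source "$template" '/data/Reek_Sampling_small'
```

The function only prints the completed command so that it can be reviewed before it is run.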

After that, run the command.

$> # Copy and paste the following code on a shell with proteus-cli installed.

export AUTH_HOST=https://auth.dev.origen.ai

export PROTEUS_HOST=https://proteus-azure.dev.origen.ai

export API_HOST=https://proteus-azure.dev.origen.ai

export WORKERS_REALM=zeroone

export WORKERS_CLIENT_ID=workers

export WORKERS_CLIENT_SECRET=9d8019be-9445-4338-b9de-12d7e1ecd2be

export REALM=zeroone

export CLIENT_ID=workers

export CLIENT_SECRET=9d8019be-9445-4338-b9de-12d7e1ecd2be

export PROTEUS_USERNAME=r-00099f45-10d7-41d4-ab11-33bec9105af5

export PROTEUS_PASSWORD=****

$> proteus-do datasets upload --replace --workers 8 Reek_Sampling_small// 3f20b00f-0562-4290-9b44-0fe0b5cb26ca

User [r-00099f45-10d7-41d4-ab11-33bec9105af5]:

Password [kzlSGPNlobmuprxBlFgXzrwFeBeNWxfi]:

2022-12-22 15:00:33,325 proteus.logger - INFO:Welcome, unit r-00099f45-10d7-41d4-ab11-33bec9105af5

2022-12-22 15:00:35,161 proteus.logger - INFO:This process will use 8 simultaneous threads.

2022-12-22 15:00:35,161 proteus.logger - INFO:started upload

2022-12-22 15:00:40,294 numexpr.utils - INFO:NumExpr defaulting to 8 threads.

File uploaded: cases/testing/SIMULATION_1/WOPR.h5: 100%|███████████| 10/10 [00:50<00:00, 7.22s/it]
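If the upload command fails to start or to authenticate, a pre-flight check along the following lines can confirm that the exported variables are present in the current shell and that the CLI is on the PATH. The function name `require_env` is hypothetical, and the list of variables checked is taken from the export lines above; whether the CLI needs all of them is an assumption.

```shell
# Hypothetical pre-flight check (not part of proteus-cli).
# Succeeds only if every named variable is set and non-empty.
require_env() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"   # indirect lookup of the variable called $name
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

require_env AUTH_HOST PROTEUS_HOST API_HOST PROTEUS_USERNAME PROTEUS_PASSWORD \
  || echo "export the variables shown in the browser before uploading" >&2

command -v proteus-do >/dev/null 2>&1 \
  || echo "proteus-do not found on PATH; is proteus-cli installed?" >&2
```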

Eventually the upload command will finish. If the dataset was uploaded correctly, the message "100% Upload complete" should be displayed in your browser.

If you are able to download the dataset files, their structure should look like the one shown here (bit.ly/43WJGoq).